
Blog Summary:

Retrieval-augmented generation (RAG) enhances AI capabilities by combining large language models with real-time data retrieval. By providing factual information, it boosts the output’s accuracy and relevance. This blog explores how RAG works in real life and compares it with traditional LLMs. It concludes by highlighting Moon Technolabs’ expertise in delivering RAG-powered solutions tailored for intelligent business applications.


Traditional large language models (LLMs) rely on what they learned during pre-training. They learn from a fixed dataset and store that knowledge in their parameters. Once training ends, their knowledge effectively freezes.

In contrast, AI models built with Retrieval-Augmented Generation (RAG) don’t need constant retraining to stay current. When asked a question, a RAG system retrieves relevant information from a separate, external knowledge base and uses it to respond immediately.

Hence, when new developments or technological breakthroughs emerge in today’s dynamic market, RAG keeps responses accurate and relevant.

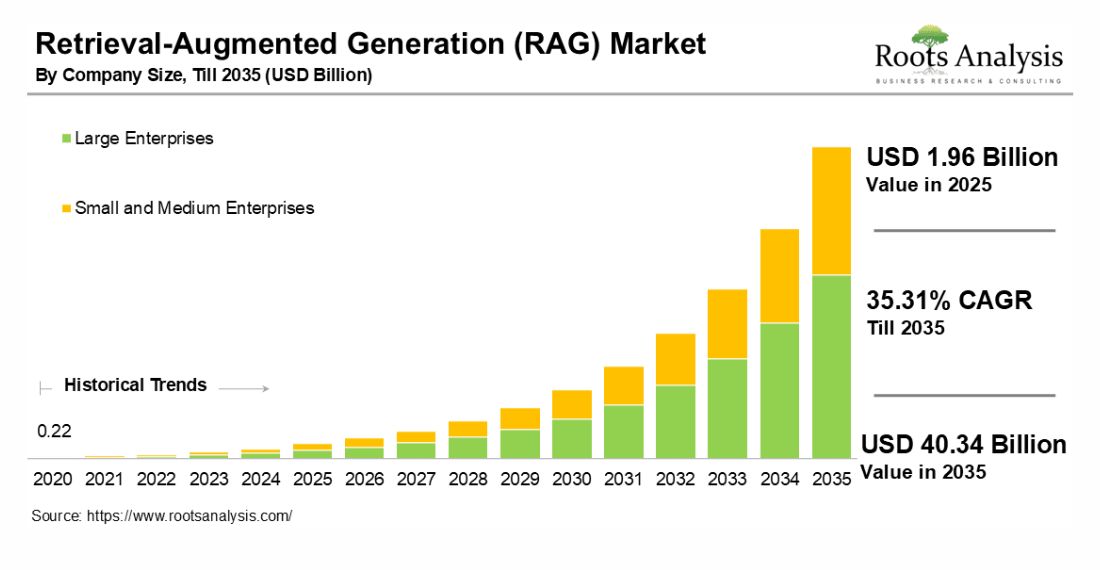

This is the primary reason why the RAG market size is expected to grow from USD 1.96 billion in 2025 to USD 40.34 billion by 2035, according to Grand View Research.

In this blog, we’ll explore how RAG works in real-world applications through several use cases. Let’s dive in!

Let’s understand RAG through the example of a university planning a new curriculum for its students. The faculty wants to incorporate the latest research papers, case studies, industry reports, and alumni insights into the course.

With a traditional AI model, the faculty would have to retrain an AI curriculum advisor whenever new information appears. With a RAG model, however, the retrieval component pulls in the latest relevant information from newly published documents.

The generation component then combines the model’s built-in knowledge with this fresh external knowledge and produces an answer without retraining.

Suppose a student searches for “data science jobs”. A semantic retriever might score an industry report titled “Emerging Machine Learning Roles in 2025” highly, even though the title never mentions “data science.” Because RAG retrieval matches meaning rather than exact keywords, it can surface related information, such as material on ‘machine learning’, for a “data science” query.
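To make the idea concrete, here is a minimal Python sketch of why a keyword match misses the report while a semantic comparison finds it. The three-number “embeddings” below are hypothetical, hand-picked values standing in for the output of a real embedding model, and `keyword_overlap` and `cosine` are illustrative helpers, not part of any library.

```python
import math

# Hypothetical 3-dimensional "embeddings" with hand-picked values, for illustration only.
# A real system would obtain much longer vectors from an embedding model.
EMBEDDINGS = {
    "data science jobs": [0.90, 0.05, 0.10],
    "Emerging Machine Learning Roles in 2025": [0.80, 0.10, 0.30],
    "Seasonal Recipes for Campus Cafeterias": [0.05, 0.95, 0.05],
}


def keyword_overlap(query: str, doc: str) -> int:
    """Number of lowercase words the query and document share (naive keyword search)."""
    return len(set(query.lower().split()) & set(doc.lower().split()))


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


query = "data science jobs"
for doc in ("Emerging Machine Learning Roles in 2025", "Seasonal Recipes for Campus Cafeterias"):
    print(
        f"{doc}: keyword overlap={keyword_overlap(query, doc)}, "
        f"cosine={cosine(EMBEDDINGS[query], EMBEDDINGS[doc]):.2f}"
    )
```

Neither title shares a word with the query, so keyword matching scores both at zero; only the vector comparison separates the relevant report from the unrelated one.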

With RAG, faculty can simply add new papers and reports to the search index, and the AI can use them immediately, helping the university design a better curriculum by dynamically pulling in the latest research and feedback.
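The “no retraining” point can be shown with a toy index. In this sketch, `DocumentIndex` is a hypothetical class, and plain word-overlap scoring stands in for a real retriever; the only thing it demonstrates is that a newly added document becomes searchable immediately, while the model itself is never touched.

```python
class DocumentIndex:
    """Hypothetical minimal in-memory search index, for illustration only.

    Scoring is plain word overlap, a stand-in for a real (e.g. semantic) retriever.
    """

    def __init__(self) -> None:
        self.docs: dict[str, set[str]] = {}

    def add(self, title: str, text: str) -> None:
        # "Indexing" here just stores the document's lowercase word set.
        self.docs[title] = set(text.lower().split())

    def search(self, query: str, k: int = 1) -> list[str]:
        words = set(query.lower().split())
        ranked = sorted(self.docs, key=lambda t: len(words & self.docs[t]), reverse=True)
        # Return up to k titles that share at least one word with the query.
        return [t for t in ranked[:k] if words & self.docs[t]]


index = DocumentIndex()
index.add("2023 Syllabus Review", "core statistics and database courses")
print(index.search("generative ai course demand"))  # nothing relevant indexed yet

# Faculty add a new report; it is searchable right away, with no retraining step.
index.add("2025 Industry Report", "employers report rising demand for generative ai skills")
print(index.search("generative ai course demand"))
```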

Here’s a short overview of how traditional AI models differ from RAG AI models:

| Capability or Feature | Traditional LLMs | RAG Models |
|---|---|---|
| Knowledge base | Built during training (static) | Built-in knowledge plus an external knowledge base (dynamic) |
| Knowledge base updates | Requires fine-tuning or retraining | No retraining required; only the document index is updated |
| Real-time information | Limited | Pulls in the latest documents |
| Factual accuracy | Degrades as training data ages | Stays current by retrieving fresh data |
| Context | Limited to what the model learned in training | Supplemented on demand by retrieved documents, within the model’s context window |
| Domain adaptability | Requires domain-specific fine-tuning | Adapts using a domain-specific retrieval base |
| Search capabilities | No external search | Uses semantic search to find the most relevant content |
| Explainability | Hard to trace outputs to sources | Can cite the retrieved documents as sources |
| Use case | Basic Q&A and summarization over known data | Factual Q&A and research assistance |

RAG ensures that the new curriculum includes contextually relevant material that stays up to date as new sources appear. A typical RAG workflow looks like this:


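Compared to traditional LLMs, RAG models are not limited to the knowledge stored inside the model. The knowledge base can hold thousands of documents, and for each question the retriever passes only the most relevant passages into the model’s context window. Older material is not lost either: it stays in the knowledge base and can be retrieved whenever it becomes relevant. So even if an entire digital repository of research papers sits behind the model, it can answer common questions with access to up-to-date, domain-specific knowledge.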
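However large the repository behind it, each answer can only draw on the passages that fit into the model’s context window, so a RAG system selects a relevant subset per question. Below is a minimal sketch of that selection step, assuming the chunks arrive already ranked by relevance and approximating tokens as whitespace-separated words (real systems count tokens with the model’s own tokenizer); `pack_context` is a hypothetical helper name and the chunks are made-up examples.

```python
def pack_context(ranked_chunks: list[str], max_tokens: int) -> list[str]:
    """Greedily keep the best-ranked chunks that fit within a token budget.

    Assumption: tokens are approximated by whitespace-separated words;
    a real system would count them with the model's own tokenizer.
    """
    selected: list[str] = []
    used = 0
    for chunk in ranked_chunks:  # assumed sorted best-first by the retriever
        cost = len(chunk.split())
        if used + cost > max_tokens:
            continue  # too big for the remaining budget; a shorter chunk may still fit
        selected.append(chunk)
        used += cost
    return selected


# Hypothetical retrieved chunks, already ranked by relevance.
chunks = [
    "Alumni survey: graduates asked for more hands-on cloud projects",
    "Full text of a 40-page industry report on hiring trends across every sector of the economy",
    "Faculty note: add a module on retrieval-augmented generation",
]
print(pack_context(chunks, max_tokens=20))
```

With a 20-word budget, the long second chunk is skipped while the shorter third chunk still fits, so the prompt stays within limits without discarding relevant material unnecessarily.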

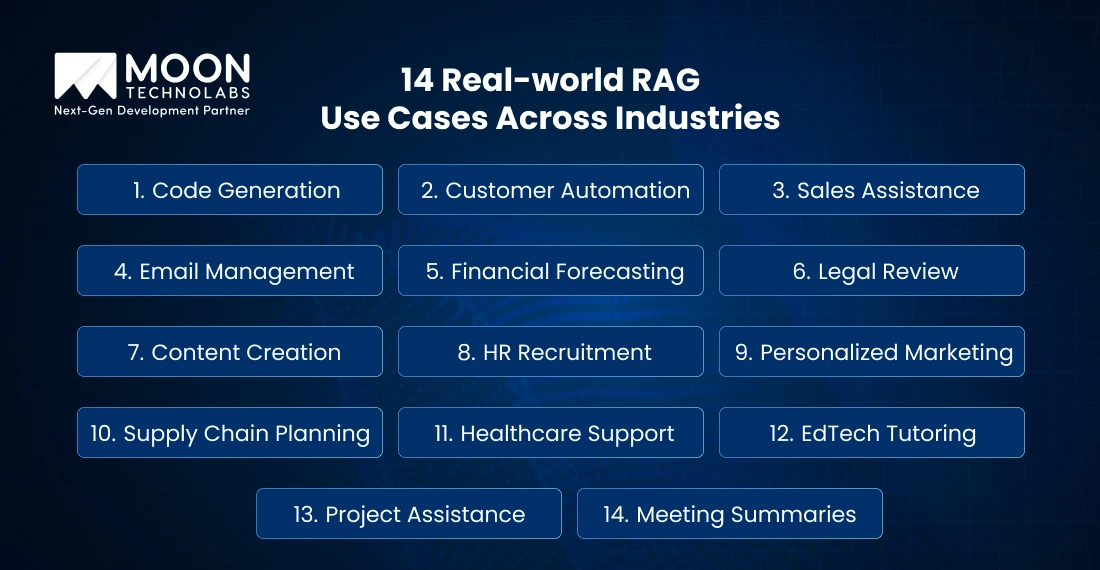
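The three stages of a RAG workflow (retrieve, augment, generate) can be sketched as one small function. This is a minimal illustration under stated assumptions: `rag_answer`, `toy_retrieve`, and `stub_generate` are hypothetical names, the retriever is a naive keyword filter, and the generator simply returns the prompt in place of a real LLM call.

```python
from typing import Callable


def rag_answer(
    question: str,
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str], str],
    k: int = 2,
) -> str:
    """Retrieve -> augment -> generate. Components are injected as plain functions."""
    passages = retrieve(question, k)  # 1. retrieve relevant passages
    context = "\n".join(f"- {p}" for p in passages)
    prompt = (  # 2. augment the question with the retrieved context
        "Use the context below to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
    return generate(prompt)  # 3. generate the final answer


# Hypothetical knowledge base and stand-in components so the sketch runs offline.
KNOWLEDGE_BASE = [
    "Alumni feedback: more hands-on data projects were requested.",
    "Campus parking rules were updated in March.",
    "Industry report: demand for machine learning skills keeps rising.",
]


def toy_retrieve(query: str, k: int) -> list[str]:
    """Naive keyword filter standing in for a real retriever."""
    words = set(query.lower().split())
    return [d for d in KNOWLEDGE_BASE if words & set(d.lower().split())][:k]


def stub_generate(prompt: str) -> str:
    """Stand-in for an LLM call; returns the prompt so the augmentation is visible."""
    return prompt


print(rag_answer("What skills should the data course cover?", toy_retrieve, stub_generate))
```

Because the retriever and generator are passed in as functions, either one can be swapped (for example, a vector search retriever or a hosted model) without changing the workflow itself.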

Let’s understand some RAG use cases in real life across industries:

RAG helps automate code generation by retrieving up-to-date library documentation and examples in response to developers’ queries about specific functionality, code snippets, and error fixes.

Real-life RAG Use Case: GitHub Copilot uses RAG for code suggestions.

Recommended Tool: CodeBERT

We have covered this use case in depth in our blog on AI in Software Development.

RAG also plays a vital role in improving customer service through virtual assistants and AI chatbot integration. Powered by advanced AI development tools, these platforms deliver fast, context-aware responses and handle routine tasks efficiently.

Real-life RAG Use Case: Zendesk uses RAG for smarter ticket resolution.

Recommended Tool: LangChain

Which other tools drive these intelligent responses? Explore our detailed guide on AI development tools.

In B2B sales, RAG helps companies automatically fill out proposals and request forms, and it improves lead prioritization by giving sales representatives the context they need to score leads.

Real-life RAG Use Case: Salesforce uses RAG to provide insights on leads.

Recommended Tool: Sentence-BERT

RAG helps draft better email templates and manage correspondence by crafting tailored follow-up emails for clients based on past interactions.

Real-life RAG Use Case: Grammarly uses RAG to adjust the email tone.

Recommended Tool: T5 Model

RAG assists in analyzing market data by retrieving information from live feeds, helping analysts make better-informed investment decisions and spot emerging trends.

Real-life RAG Use Case: Bloomberg Terminal for market insights.

Recommended Tool: Cohere Embeddings

RAG speeds up legal drafting by retrieving relevant cases quickly, helping lawyers find precedents for contract disputes, legal decisions, and compliance matters.

Real-life RAG Use Case: LexisNexis applies RAG for legal analysis.

Recommended Tool: BM25
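BM25 is a lexical ranking function: it scores documents by how often they contain the query terms, weighted by how rare each term is across the collection and normalized for document length. That suits legal search, where exact terms such as “breach of contract” matter. Below is a minimal sketch of the Okapi BM25 formula in plain Python, assuming whitespace tokenization and the commonly used “+1” IDF variant; `bm25_scores` is a hypothetical helper, the case summaries are made-up examples, and production systems would typically use a search engine’s built-in BM25 instead.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Okapi BM25 score of each document for the query (whitespace tokenization)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs  # average document length
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)  # term frequency within this document
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # k1 dampens repeated terms; b controls document-length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


# Hypothetical case summaries, for illustration only.
CASES = [
    "breach of contract damages awarded for late delivery",
    "employment dispute over non-compete clause",
    "contract breach claim dismissed for lack of notice",
]
scores = bm25_scores("breach of contract", CASES)
for score, case in sorted(zip(scores, CASES), reverse=True):
    print(f"{score:.3f}  {case}")
```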

RAG enhances the content creation process by grounding drafts in accurate, context-aware information, helping marketers optimize blogs for SEO, and streamlining research with the latest information.

Real-life RAG Use Case: Jasper uses RAG to create content.

Recommended Tool: RoBERTa

In AI agent development for HR, RAG streamlines candidate data retrieval by pulling the most relevant resumes for a role.

Real-life RAG Use Case: LinkedIn uses RAG for talent matching.

Recommended Tool: Elasticsearch

RAG helps marketers build personalized campaigns by drawing dynamic insights from browsing histories and user behavior patterns to create targeted ad content.

Real-life RAG Use Case: HubSpot integrates RAG for personalized campaigns.

Recommended Tool: FAISS

See how AI personalizes marketing at scale in our blog on AI in SaaS.

In supply chains, RAG helps optimize logistics planning by retrieving real-time traffic data to inform route recommendations.

Real-life RAG Use Case: DHL uses RAG to optimize delivery routes.

Recommended Tool: Graph Retrieval

Through generative AI integration, RAG supports more accurate diagnosis by retrieving relevant medical data for doctors, and it helps tailor treatment plans using patient records.

Real-life RAG Use Case: IBM Watson Health uses RAG for medical insights.

Recommended Tool: Dense Passage Retrieval (DPR)

Looking to build precision-driven AI healthcare solutions? Explore our Generative AI Development Services tailored for real-time, data-backed medical decisions.

RAG helps deliver personalized learning by providing updated resources for students, including custom explanations of subjects, case studies, concepts, and papers.

Real-life RAG Use Case: Pearson uses RAG for adaptive learning.

Recommended Tool: Vector Search

In project management, RAG helps teams plan their projects better by retrieving past project data and forecasting timelines from historical reports.

Real-life RAG Use Case: Asana leverages RAG for task insights.

Recommended Tool: LlamaIndex

RAG can be used effectively to summarize meetings into key points, enrich transcripts of strategy sessions, and plan follow-up topics.

Real-life RAG Use Case: Otter.ai uses RAG to enhance transcripts.

Recommended Tool: Whisper

RAG typically relies on semantic retrieval, which means it searches by meaning. Instead of matching simple keywords, it embeds the question and compares it with the embedded documents.

Let’s understand the top 5 benefits of implementing RAG models:

Standard AI models only use information included in their training data. RAG converts the query and documents into vector embeddings and retrieves the documents whose vectors are the query’s nearest neighbors, meaning those closest in meaning.
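The nearest-neighbor step can be sketched as an exact (brute-force) search: normalize every vector, score each document by cosine similarity to the query, and keep the top k. In this sketch the vectors are hypothetical toy values rather than real model output, and `top_k` is an illustrative helper; at scale, libraries such as FAISS provide much faster (including approximate) similarity search over millions of vectors.

```python
import heapq
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a (non-zero) vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def top_k(query_vec: list[float], doc_vecs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Exact k-nearest-neighbor search by cosine similarity (brute force)."""
    q = normalize(query_vec)
    scored = (
        (sum(a * b for a, b in zip(q, normalize(v))), doc_id)
        for doc_id, v in doc_vecs.items()
    )
    return [doc_id for _, doc_id in heapq.nlargest(k, scored)]


# Hypothetical document embeddings, for illustration only.
DOC_VECTORS = {
    "alumni_survey_2025": [0.9, 0.1, 0.2],
    "parking_policy": [0.0, 0.2, 0.9],
    "ml_jobs_report": [0.7, 0.6, 0.1],
}
print(top_k([0.8, 0.4, 0.1], DOC_VECTORS, k=2))
```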

For example, suppose the university builds a chatbot for students on a RAG-enabled LLM. If a student asks which courses alumni said they needed last semester, the chatbot can answer from the latest alumni feedback in its knowledge base rather than from outdated training data.

Even if the underlying model was trained on last year’s data, it also retrieves relevant information from an external knowledge base, so it doesn’t need to cram all knowledge into its parameters. RAG overcomes this limitation by augmenting its context on demand.

Suppose the university faculty asks the AI model, “Which topics should our new marketing course include?” RAG acts like a smart researcher: It “reads” the latest papers on demand and generates an answer.

In this detailed guide, we have explored how you can fine-tune an AI model.

RAG uses a retrieval model to search a knowledge base of recent research, reports, and surveys, then passes the most relevant documents to the generator along with the original query. The model therefore has both its built-in expertise and fresh documents as context when crafting its answer.
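Passing the most relevant documents along with the original query usually means assembling them into a single prompt. Here is a minimal sketch, assuming the retriever returns `(title, text)` pairs; `build_prompt` is a hypothetical helper, and the instruction wording and sample documents are illustrative. Numbering the sources lets the model cite them, which is what makes RAG answers traceable.

```python
def build_prompt(question: str, docs: list[tuple[str, str]]) -> str:
    """Combine the original query with retrieved (title, text) pairs.

    Each source gets a number so the model can cite [1], [2], ... in its answer.
    """
    sources = "\n".join(
        f"[{i}] {title}: {text}" for i, (title, text) in enumerate(docs, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}"
    )


# Hypothetical retrieved documents, for illustration only.
retrieved = [
    ("2025 Marketing Trends Survey", "Brands are shifting budget toward short-form video."),
    ("Alumni Feedback Report", "Graduates asked for more analytics and SEO practice."),
]
print(build_prompt("Which topics should our new marketing course include?", retrieved))
```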

For organizations looking to leverage this capability, generative AI consulting services can help align RAG with strategic business goals through tailored AI strategy development.

Let us help you build a next-generation AI model with RAG for trustworthy, smarter, and dynamic responses.

Specializing in RAG-powered AI LLMs to build tailored and industry-specific solutions across healthcare, legal, finance, and customer support, we aim to deliver secure and scalable conversational systems.

At Moon Technolabs, our AI and ML experts leverage custom retriever-generator architectures and advanced semantic search integration to operationalize your organization’s knowledge assets.

Whether you plan to integrate RAG into existing platforms or create standalone applications, we have a solid track record of developing intelligent and dynamic solutions that fully align with your unique business needs.

Make us your trusted partner in developing custom AI solutions to guide your RAG initiatives from proof-of-concept to production with technical expertise and strategic support.

The essence of RAG lies in how it lets the AI treat retrieved documents as an extension of its working memory, combining them with its pre-trained skills to generate richer, more accurate answers.

Throughout the process, the retrieved text is available to the model whenever it needs it. A RAG system doesn’t just list document titles in its output; it reads and synthesizes the information. By combining its training with retrieved text, it provides factual, detailed answers.

Is your organization planning to implement RAG to update your AI knowledge base?

Connect with our experts for a FREE consultation.
