Blog Summary:

This blog compares Mistral and Llama to help you decide which fits your needs. We compare them across several factors and explain their benefits, use cases, and the scenarios each one suits. Let’s start reading.

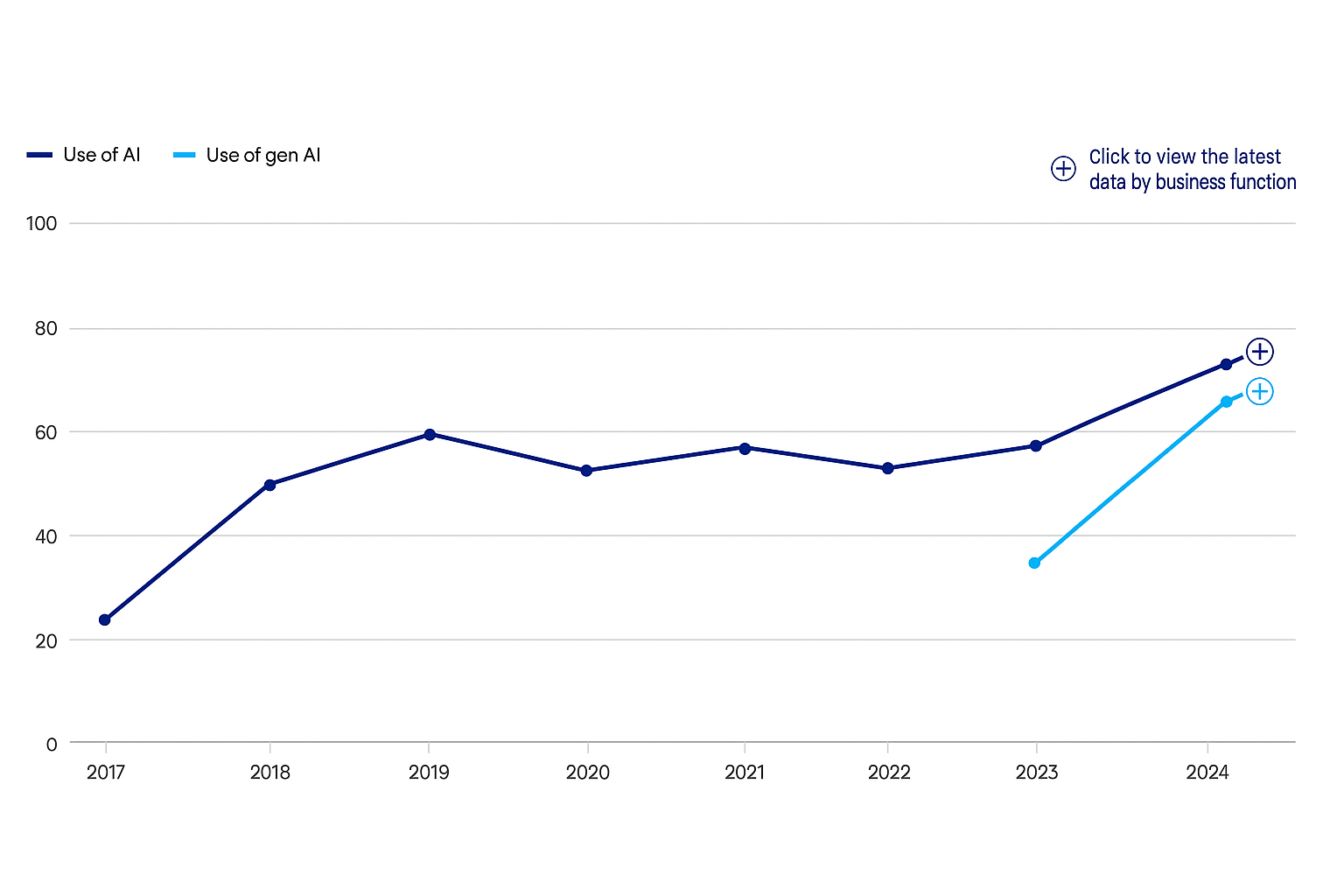

AI continues to redefine multiple industries. Most businesses have adopted it to transform their operations and improve efficiency. According to McKinsey, 78% of organizations use AI in at least one business function. When evaluating AI models, businesses often come across Mistral and Llama, two popular large language model (LLM) families.

So, which one has an edge over the other when it comes to Mistral vs Llama? Both models have unique strengths. Mistral is the right option for resource efficiency and agility, whereas Llama is good for broad model variants and scalable performance.

In this blog, we will compare both AI models across criteria such as accuracy, speed, scalability, resource efficiency, and business-specific use cases. This will help you identify the right model to implement for your business. Let’s explore.

Mistral is a robust large language model family with openly released weights, built for scalability, efficiency, and speed. It delivers strong performance while keeping computational requirements low.

This model supports a range of applications, including code completion, text generation, conversational AI, and more. This makes it a strong choice for researchers, developers, and enterprises seeking cost-effective, flexible AI solutions.

Mistral offers numerous advantages, from its lightweight architecture to support for multilingual capabilities. Let’s explore some of its top benefits in detail:

Mistral is designed to offer high efficiency, speed, and performance. Its optimized architecture enables fast inference and training without compromising output quality. That makes it a strong option for real-time applications.

Since Mistral releases its models openly, it benefits from a worldwide developer community that actively contributes to its continuous improvement. This brings bug fixes, frequent updates, and access to next-generation tools.

With a flexible design, Mistral integrates smoothly across multiple platforms, environments, and frameworks. It adapts well to on-premises setups, cloud infrastructure, and edge services.

Mistral is a top choice for scalable implementation, which makes it suitable for both startups and large enterprises. With its modular architecture, businesses can scale their workloads without major infrastructure upgrades.

This language model doesn’t require heavy computational resources to achieve powerful results. Its lightweight design lowers overall operational costs, making advanced AI accessible to businesses of all sizes with less reliance on expensive cloud infrastructure or high-end GPUs.

Mistral is used for a range of purposes, including code generation, text summarization, and more. Let’s find out some of the popular use cases of this large language model:

As a robust content creation tool, Mistral can generate contextual, relevant text. It can generate top-quality content without taking too much time, whether it’s promotional copy, blog posts, or product descriptions.

Mistral also helps software developers as a powerful coding assistant. It can autocomplete functions, suggest code snippets, and detect potential bugs to streamline the entire development workflow.

Mistral offers extensive conversational capabilities and is considered the top choice for developing virtual assistants and sophisticated chatbots.

With an immense understanding of natural language, it enables improved customer engagement, human-like interactions, automated support, and more.

With Mistral, researchers and analysts can conveniently extract important insights from textual information and large datasets.

They can use this model to accomplish tasks such as generating hypotheses, summarizing findings, conducting literature reviews, and more.

Since Mistral supports multiple languages, it’s a strong choice for global applications. It can understand, generate, and translate text in multiple languages, which helps international businesses build cross-border AI solutions and multilingual content platforms.

Businesses can fine-tune this model to match their specific needs. With custom training, the model can align with domain-specific language, proprietary data, specialized workflows, and more.

This delivers tailored AI solutions that boost both operational efficiency and competitive advantage.

Llama is another openly available (open-weight) large language model family known for its scalability and advanced natural language understanding. Trained on diverse datasets, it can perform NLP tasks efficiently, including content generation, conversational AI, and research applications. It also suits enterprises looking for customizable AI.

Llama offers organizations numerous benefits. The following are some of them:

With its advanced natural language understanding, Llama comprehends complex queries, interprets content, and generates human-like responses. These NLP capabilities make it suitable for a wide range of applications, from virtual assistants to automated customer support.

Trained on vast, diverse datasets, Llama offers a broad knowledge base across a range of domains. This improves its ability to handle industry-specific terminology, nuanced language, and real-world scenarios.

Llama’s architecture supports both high-volume processing and enterprise-grade applications. It helps businesses tackle large workloads efficiently, whether for large-scale content generation, real-time customer interactions, or advanced analytics.

With a powerful developer ecosystem, Llama simplifies integration, customization, and troubleshooting for developers. This enables rapid adoption and shortens time-to-market for AI-driven solutions.

Llama gives enterprises and developers the freedom to fine-tune the model for specific use cases. This flexibility supports domain-specific optimization and improved performance, and it helps organizations develop tailored AI solutions.

From advanced text generation to research summarization, Llama has a variety of use cases. Let’s discuss some of the most popular ones:

Llama powers virtual assistants and smart conversational agents. It has a robust natural language understanding that ensures a context-aware, smooth interaction. It thus enables businesses to offer human-like and personalized communication for sales, customer support, internal knowledge management systems, and more.

Llama helps researchers perform various tasks, such as data analysis, literature reviews, training custom models, hypothesis generation, and more. Its flexibility supports both innovation and experimentation, which makes it a valuable tool for industrial and academic AI research projects.

Llama also helps businesses streamline their workflows by automating content creation. It can generate product descriptions, reports, and marketing copy, taking over many repetitive tasks.

Llama boasts powerful contextual reasoning and comprehension, helping extract insights from textual information and complex datasets. It can categorize, summarize, and retrieve relevant data more efficiently. It also supports research analysis, decision-making, and knowledge management.

This language model is well suited to modernizing customer support systems. With strong conversational capabilities, it can handle a wide range of queries, provide detailed information, and resolve issues across multiple channels.

Llama can be deployed on both edge computing setups and cloud platforms. This lets businesses integrate AI solutions that support scalability, distributed AI workloads, real-time processing, and low-latency applications across different operational setups.

Deciding between Mistral and Llama should be based on your project’s core priorities, such as speed, efficiency, and lightweight deployment. Let’s go through a detailed comparison of the two to identify the right option:

Mistral is a top choice for edge devices, resource-constrained environments, and rapid prototyping. Its architecture is well-suited to high speed while ensuring high-quality outputs, particularly for summarization, text generation, and conversational tasks.

Llama is a strong choice for large-scale NLP applications, featuring a complex architecture that supports extensive context retention, advanced reasoning, and enterprise-grade scalability. Its larger model variants can handle intricate language tasks.

Mistral is a strong choice for code assistance, language generation, and multilingual tasks, as it’s trained on vast datasets. It delivers high accuracy in the majority of NLP applications.

Llama is pretrained on large, diverse datasets and thus has a good understanding of nuance, context, and specialized domains. This helps it achieve high accuracy across different NLP tasks, particularly for enterprises that rely on contextual reasoning and precise language comprehension.

Since Mistral is known for high computational efficiency and low resource requirements, it performs well on edge devices and standard hardware. This keeps operational costs down and response times fast, which makes it better for fast deployments and lightweight applications.

Being resource-intensive, Llama requires cloud infrastructure or powerful GPUs to achieve optimal performance. That can add latency in some cases, but in return it improves scalability, contextual understanding, and accuracy for large-volume enterprise applications.
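To put the hardware requirements discussed above in rough numbers, a common rule of thumb is that a model's weights alone need about (number of parameters) × (bytes per parameter) of memory. The sketch below is only an illustration under stated assumptions: the 7-billion and 70-billion parameter counts are example sizes for a smaller and a larger model, not figures from this article, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: only model weights are counted; activations, KV cache,
# and framework overhead are ignored, so real usage will be higher.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes only (hypothetical examples, not vendor specs).
    example_models = [("smaller model, 7B params", 7e9),
                      ("larger model, 70B params", 70e9)]
    for label, params in example_models:
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{label} @ {precision}: ~{gb:.1f} GB for weights")
```

An estimate like this helps explain why smaller models can fit on a single consumer GPU or an edge device, while larger variants usually call for multi-GPU or cloud setups.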

Mistral has a strong, collaborative community that shares tools and ships frequent updates. With these community-based enhancements, it’s an apt choice for developers seeking open innovation.

With a powerful ecosystem, Llama receives broad support through tutorials, extensive documentation, developer resources, and more. Its wide adoption in enterprise and research environments brings strong integration support and access to many community contributions.

Mistral can be fine-tuned for specific cases, such as rapid prototyping, lightweight applications, and edge deployments. Developers can leverage its flexibility to adapt the model efficiently, without significant computational overhead.

Llama offers advanced customization options through open-weight availability. This enables enterprise-level optimizations, domain-specific training, and specialized AI applications. These optimization capabilities make it valuable for businesses seeking tailored solutions, long-term AI integration, and in-depth reasoning.

Mistral offers open, flexible licensing options for many of its models, which makes it a good choice for businesses of all sizes, from startups to enterprises. Teams should still confirm the license of the specific model they plan to use.

Available with open-weight access, Llama is well suited to customization, commercial use, and integration with enterprise systems. Meanwhile, businesses need to comply with its usage terms to stay operationally and legally aligned.

You can opt for Mistral for projects that require efficiency, speed, and lightweight deployment. Let’s explore more scenarios where this language model fits.

Mistral is a good option for scenarios with limited hardware resources, including mobile applications, edge devices, small-scale servers, and more.

It enables you to implement AI solutions quickly without the need for cloud infrastructure or expensive GPUs. It keeps operational costs low while delivering reliable performance.

You can go with Mistral when developers need to experiment, collaborate, and share improvements within a flourishing community. It encourages innovation, ensures frequent updates, and makes it easier for teams to use community-built tools.

With Mistral, developers can test ideas rapidly, iterate on models, and implement functional AI solutions in real-world environments. It also works well with edge computing, which keeps AI workloads running close to users.

Llama is the best match for companies that focus on high-accuracy NLP, scalability, enterprise-grade AI integration, and more. The following are some of the top scenarios where you can consider using it:

Organizations handling large-scale AI workloads can go for Llama. Be it academic research, enterprise applications, or industrial AI projects, it’s perfect for all of them.

It can scale to meet the demands of complex operations, such as automating multi-step workflows or generating insights.

Llama is a good choice for businesses in fields that rely heavily on precise natural language processing, including healthcare, legal tech, and finance. They can leverage its strong comprehension capabilities to get accurate and reliable outputs.

Llama is an appropriate choice for long-term AI deployment. It is a preferred option for businesses seeking AI solutions that support long-term research initiatives and deliver consistent performance over time.

After going through this Mistral vs Llama comparison, you may already have decided on the right option for your project. No matter which model you adopt, your final selection should be based on your project’s core requirements, type, and goal.
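One simple way to turn project requirements into a choice is a weighted scoring matrix. The sketch below is a hypothetical illustration: the criteria names, the weights, and especially the 1-to-5 scores are placeholders that loosely mirror the qualitative comparison above. They are not benchmark results, and you should replace them with numbers from your own evaluation.

```python
from typing import Dict

Scores = Dict[str, float]


def weighted_score(scores: Scores, weights: Scores) -> float:
    """Weighted average of per-criterion scores, normalized by total weight."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(weights[c] * scores[c] for c in weights) / total_weight


def pick_model(candidates: Dict[str, Scores], weights: Scores) -> str:
    """Return the candidate name with the highest weighted score."""
    return max(candidates, key=lambda name: weighted_score(candidates[name], weights))


# PLACEHOLDER scores on a 1-5 scale (hypothetical, not measured).
CANDIDATES = {
    "Mistral": {"resource_efficiency": 5, "speed": 4, "scalability": 3},
    "Llama": {"resource_efficiency": 2, "speed": 3, "scalability": 5},
}

# Hypothetical priority profiles for two kinds of projects.
EDGE_PROJECT = {"resource_efficiency": 0.5, "speed": 0.3, "scalability": 0.2}
ENTERPRISE_PROJECT = {"resource_efficiency": 0.1, "speed": 0.2, "scalability": 0.7}

if __name__ == "__main__":
    print("Edge-focused project:", pick_model(CANDIDATES, EDGE_PROJECT))
    print("Enterprise-scale project:", pick_model(CANDIDATES, ENTERPRISE_PROJECT))
```

The value of this approach lies less in the final number and more in forcing the team to state its priorities explicitly before comparing models.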

Partnering with Moon Technolabs, an AI development company, helps you make the right selection. We assist you in customizing and implementing the best model for your business, while ensuring a smooth integration, higher performance, and long-term scalability.