
Blog Summary:

This post compares LM Studio and Ollama, two well-known tools for running large language models (LLMs) locally. We cover the pros and cons and the use cases of each, followed by a point-by-point comparison, to help you select the right option for your next project.


So, you want to build a chatbot that mimics your brand voice and runs entirely offline, without relying on the cloud? Such a chatbot should keep your private data safe, avoid network round-trip latency, and respond quickly.

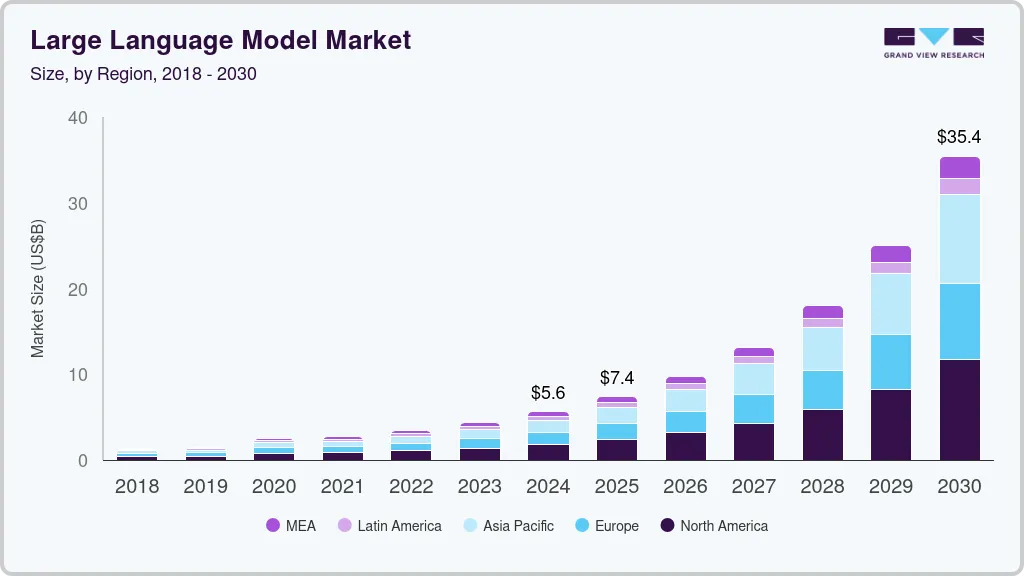

This is possible if you choose the right LLM and the right tool to run it. According to a Grand View Research report, the LLM market is projected to grow at a CAGR of 36.9% from 2025 to 2030. When looking for tools to run LLMs locally, you will likely come across two popular options: LM Studio and Ollama.

So, which one wins in the LM Studio vs Ollama matchup? Both are standout tools that let you run LLMs locally. In this post, we compare them in detail to help you choose the right one for your needs.

LM Studio is a versatile desktop application that lets businesses and developers download, run, and manage LLMs on their own systems. It combines broad model support, a user-friendly interface, and resource management controls, and it runs models on open-source inference engines such as llama.cpp (and Apple's MLX on Apple Silicon Macs).

LM Studio offers numerous advantages that make it a strong option for enterprises, researchers, and developers alike. Let’s look at some of the top benefits:

The most important advantage of LM Studio is that it runs AI models offline: once a model is downloaded, prompts and data stay on your machine. This supports data privacy and security, making it a good fit for research projects and enterprise use cases where sending data to cloud services poses risks.

Offline execution also removes network round trips, so response times depend only on your hardware, and experimentation continues uninterrupted even without an internet connection.

LM Studio’s intuitive graphical user interface (GUI) lets users work visually instead of typing commands. This shortens the learning curve for newcomers while also saving experienced developers time.

From small quantized models to large transformer-based LLMs, LM Studio supports a wide range of open-weight model families, mainly in the GGUF format (plus MLX models on Apple Silicon Macs). With this flexibility, users can compare multiple models within a single platform.

LM Studio also provides controls for managing system resources, such as GPU offloading (how many model layers run on the GPU), context length, and CPU thread usage.

Managing these resources well keeps computation costs down and model execution smooth, especially when large models or long inputs push against the limits of your hardware.
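To get a feel for these resource demands, a common rule of thumb (an approximation, not a formula published by LM Studio) is that model weights need about parameters × bits per weight ÷ 8 bytes of memory. The minimal Python sketch below applies it; the function name is hypothetical, and real usage is higher because the KV cache, context length, and runtime overhead come on top.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory (in GB, 10**9 bytes) needed just to hold model weights.

    Rule of thumb: bytes = parameters * bits_per_weight / 8.
    KV cache, activations, and runtime overhead are NOT included.
    """
    if params_billion <= 0 or bits_per_weight <= 0:
        raise ValueError("params_billion and bits_per_weight must be positive")
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Compare the same 7B-parameter model at different precisions.
    for bits in (16, 8, 4):
        gb = estimate_weight_memory_gb(7, bits)
        print(f"7B parameters at {bits}-bit: ~{gb:.1f} GB for weights alone")
```

This is why 4-bit quantized models are popular for local use: the weights of a 7B model shrink from about 14 GB at 16-bit to about 3.5 GB. Common quantization formats store slightly more than the nominal bit width per weight, so treat the result as a lower bound.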

Although the LM Studio app itself is not open source, it builds on open-source projects such as llama.cpp and works with openly licensed models shared by the community, for example on Hugging Face. This lets businesses customize their workflows, benefit from community support, and contribute improvements upstream.

From offline experimentation and privacy-focused research to enterprise deployments, LM Studio is used for a range of purposes. Let’s look at some popular use cases:

With LM Studio, researchers and developers can experiment with AI models in flexible, safe environments. Whether evaluating model performance or testing prompts and settings, they can do it all without relying on cloud resources.

LM Studio’s offline execution capability benefits businesses that handle sensitive data. They can run models locally to support data privacy and compliance, which suits regulated industries such as healthcare, finance, and government.
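For sensitive data, one practical safeguard is to make client code refuse to send prompts anywhere other than the local machine. The sketch below is a hypothetical helper, not a feature of LM Studio; it only assumes that the model is served from a local address (for example, LM Studio’s local server, which listens on http://localhost:1234 by default).

```python
import ipaddress
from urllib.parse import urlparse


def is_local_endpoint(url: str) -> bool:
    """Return True if the URL's host is this machine (localhost or a loopback IP)."""
    host = urlparse(url).hostname  # lowercased, brackets stripped for IPv6
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other hostname could resolve to a remote machine, so reject it.
        return False


def require_local(url: str) -> str:
    """Raise before any data is sent to an endpoint that is not on this machine."""
    if not is_local_endpoint(url):
        raise ValueError(f"Refusing to send data to non-local endpoint: {url}")
    return url


if __name__ == "__main__":
    # Hypothetical endpoints used only to illustrate the check.
    for url in ("http://localhost:1234/v1/chat/completions", "https://api.example.com/v1"):
        print(url, "->", "local" if is_local_endpoint(url) else "REMOTE")
```

Note that this checks only the URL your code calls; it does not stop the model runtime itself from making network requests, and it deliberately rejects hostnames other than localhost even if they happen to resolve to this machine.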

LM Studio is also a strong option for learning and research: research labs and universities use its multi-model compatibility and intuitive interface for teaching and experimentation.

Developers can prototype AI applications quickly with LM Studio, using its resource management and broad model support to build intelligent assistants, proof-of-concept tools, and smart apps.

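LM Studio lets businesses and researchers tailor pre-trained models to their needs without retraining them, for example by adjusting system prompts and inference parameters such as temperature and context length. It does not fine-tune model weights itself, so fine-tuning on unique datasets requires separate tooling; the resulting model can then be run locally in LM Studio if it is available in a supported format.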

Despite its advantages, LM Studio also has some drawbacks:

LM Studio’s multi-model support and full GUI come at the cost of heavier resource consumption. Running large models locally requires significant GPU, CPU, and memory resources, and the desktop interface adds overhead on top of that.

Compared to CLI-first tools, LM Studio’s workflow centers on its GUI, so automation takes more effort. Tasks such as batch processing, repetitive jobs, and complex pipelines usually need manual steps or extra scripting against its local server or its lms command-line tool, which can hurt efficiency in large-scale workflows.

Developers who prefer scripting-based model management may find LM Studio less flexible. Because the tool focuses mainly on GUI-driven operations, integrating it into custom development pipelines or automated scripts is more challenging than with a CLI-first tool.
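Scripting is still possible, though. LM Studio can serve loaded models through a local server that mimics the OpenAI chat completions API (by default at http://localhost:1234/v1). The minimal Python sketch below uses only the standard library; it assumes the server is running with a model loaded, and the model identifier in the example is a placeholder.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, system: str, user: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response."""
    return response["choices"][0]["message"]["content"]


def chat(payload: dict, url: str = LMSTUDIO_URL, timeout: float = 120) -> str:
    """POST the payload to the local server and return the reply text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))


# Example (requires LM Studio's server to be running; the model name is a placeholder):
# payload = build_chat_request("your-loaded-model", "You are concise.", "What is GGUF?")
# print(chat(payload))
```

Because the request format follows the OpenAI convention, existing OpenAI-style client code can often be pointed at the local server by changing only the base URL.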

Ollama is a lightweight tool for running large language models locally. It is available for macOS, Linux, and Windows and offers a simple CLI-based setup, fast inference, and a local REST API for smooth integration.

Ollama offers numerous advantages for local AI development. Let’s look at some of its most notable benefits:

Emphasizing efficiency and simplicity, Ollama lets developers download, run, and customize models quickly through its command-line interface (CLI). It removes complex configuration, enabling fast experimentation and deployment, especially for developers comfortable with terminal-based workflows.
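The everyday CLI workflow comes down to a few commands: ollama pull downloads a model, ollama run sends it a prompt (or opens an interactive chat when no prompt is given), and ollama list shows what is installed. As a sketch, these can be driven from Python with subprocess; the helper names are hypothetical, and llama3.2 is only an example model name from Ollama’s library.

```python
import shutil
import subprocess


def ollama_cmd(*args: str) -> list[str]:
    """Build the argument list for an ollama CLI call (no shell, so no quoting issues)."""
    return ["ollama", *args]


def run_ollama(*args: str, timeout: float = 600) -> str:
    """Run an ollama CLI command and return its standard output."""
    if shutil.which("ollama") is None:
        raise FileNotFoundError("the ollama CLI was not found on PATH")
    result = subprocess.run(
        ollama_cmd(*args),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit code
        timeout=timeout,
    )
    return result.stdout


# Example (requires Ollama to be installed; llama3.2 is an example model name):
# run_ollama("pull", "llama3.2")
# print(run_ollama("run", "llama3.2", "Explain quantization in one sentence."))
# print(run_ollama("list"))
```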

At its core, Ollama is a lightweight background server, so it adds little overhead and stays usable even on systems with limited resources. Lower overhead means lower latency, making it a good fit for prototyping, testing, and small-scale production environments where responsiveness and speed matter.
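Part of what makes local inference feel responsive is streaming: with stream enabled, Ollama’s /api/generate endpoint returns newline-delimited JSON objects, each carrying the next piece of text in a response field, until an object with done set to true arrives. The standard-library sketch below consumes that stream; it assumes the server runs at its default address (http://localhost:11434), and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Iterable, Iterator, Union

# Assumption: Ollama's API is reachable at its default local address.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def iter_stream_text(lines: Iterable[Union[bytes, str]]) -> Iterator[str]:
    """Yield text chunks from Ollama's newline-delimited JSON stream."""
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue  # skip blank lines
        chunk = json.loads(line)
        if chunk.get("response"):
            yield chunk["response"]
        if chunk.get("done"):
            break  # the final object mainly carries timing statistics


def stream_generate(model: str, prompt: str, url: str = OLLAMA_GENERATE_URL) -> Iterator[str]:
    """Send a streaming /api/generate request and yield text as it arrives."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        # HTTP responses iterate line by line, one JSON object per line.
        yield from iter_stream_text(resp)


# Example (requires a running Ollama server; the model name is an example):
# for piece in stream_generate("llama3.2", "Why is the sky blue?"):
#     print(piece, end="", flush=True)
```

Streaming does not make generation itself faster, but users see the first words almost immediately instead of waiting for the whole answer.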

Another major advantage of Ollama is its model library, which offers many popular open-weight LLMs, such as the Llama, Mistral, and Gemma families, that can be downloaded with a single command. This gives developers access to a range of AI capabilities with few compatibility issues.

Ollama installs natively on macOS, Linux, and Windows, and an official Docker image (ollama/ollama) is also available. This gives developers a consistent way to run models across machines, with stable performance and compatibility with common development tools.

Ollama also makes model management efficient: developers can pull, list, update, and remove models with short commands, and they can define customized model variants in a plain-text Modelfile. Because these steps are scriptable, setups are easier to automate and reproduce.
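A Modelfile is how Ollama captures a customized model as plain text: FROM names the base model, PARAMETER sets inference options, and SYSTEM sets the system prompt. Keeping the file in version control makes the setup reproducible. The Python sketch below renders a minimal Modelfile for a brand-voice chatbot like the one described in the introduction; the function name, base model, and prompt are illustrative.

```python
def render_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Render a minimal Ollama Modelfile: base model, one parameter, a system prompt."""
    if '"""' in system_prompt:
        # Triple quotes would end the SYSTEM block early.
        raise ValueError('system prompt must not contain """')
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


if __name__ == "__main__":
    # Save this output as "Modelfile", then build and run the custom model in a shell:
    #   ollama create brand-bot -f Modelfile
    #   ollama run brand-bot
    print(render_modelfile("llama3.2", "You reply in our brand's friendly, concise voice."))
```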

From research testing and AI chatbots to API integration, Ollama is used for a range of purposes. Let’s explore some of its use cases:

Developers leverage Ollama to build virtual assistants on local machines. They can run models offline to maintain data privacy while customizing behavior for specific applications, improving responses, testing conversational AI, and more.
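At its simplest, a local assistant keeps the conversation history and resends it with each turn. The sketch below uses Ollama’s /api/chat endpoint, which accepts a list of role/content messages and, with stream set to false, returns the reply under message.content. The class name and the example model are hypothetical, and the server is assumed to run at its default address.

```python
import json
import urllib.request

# Assumption: Ollama's API is reachable at its default local address.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


class LocalAssistant:
    """Keeps chat history in memory and sends it to a local Ollama model."""

    def __init__(self, model: str, system_prompt: str, url: str = OLLAMA_CHAT_URL):
        self.model = model
        self.url = url
        self.messages = [{"role": "system", "content": system_prompt}]

    def build_payload(self, user_text: str) -> dict:
        """Full history plus the new user turn; does not modify self.messages."""
        return {
            "model": self.model,
            "messages": self.messages + [{"role": "user", "content": user_text}],
            "stream": False,
        }

    def record_turn(self, user_text: str, reply: str) -> None:
        """Append a completed exchange so later turns have context."""
        self.messages.append({"role": "user", "content": user_text})
        self.messages.append({"role": "assistant", "content": reply})

    def ask(self, user_text: str, timeout: float = 120) -> str:
        """Send one user message and return the model's reply."""
        body = json.dumps(self.build_payload(user_text)).encode("utf-8")
        req = urllib.request.Request(
            self.url, data=body, headers={"Content-Type": "application/json"}
        )
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            reply = json.load(resp)["message"]["content"]
        self.record_turn(user_text, reply)
        return reply


# Example (requires a running Ollama server; the model name is an example):
# bot = LocalAssistant("llama3.2", "You are a helpful support agent for our store.")
# print(bot.ask("What are your opening hours?"))
```

Because the whole history is resent each time, long conversations eventually exceed the model’s context window; a production assistant would need to trim or summarize older turns.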

Ollama enables developers and researchers to experiment with and test a variety of models in a fully controlled, local environment. It allows quick evaluation of model performance and fast iteration on prompts and model settings without using cloud infrastructure.
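For quick model comparisons, Ollama’s non-streaming responses (and the final object of a stream) include timing metadata: eval_count is the number of generated tokens and eval_duration is the time spent generating them, in nanoseconds. Generation speed is then tokens/s = eval_count × 10^9 ÷ eval_duration. A small sketch with hypothetical helper names:

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from Ollama's response metadata (eval_duration is in ns)."""
    count = response.get("eval_count", 0)
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0  # metadata missing, e.g. an intermediate stream chunk
    return count * 1e9 / duration_ns


def rank_models(results: dict[str, dict]) -> list[tuple[str, float]]:
    """Given {model_name: final_response}, return (name, tokens/s) pairs, fastest first."""
    speeds = [(name, tokens_per_second(resp)) for name, resp in results.items()]
    return sorted(speeds, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Illustrative metadata only, not measured results.
    sample = {
        "model-a": {"eval_count": 120, "eval_duration": 3_000_000_000},
        "model-b": {"eval_count": 200, "eval_duration": 4_000_000_000},
    }
    for name, speed in rank_models(sample):
        print(f"{name}: {speed:.1f} tokens/s")
```

Speeds measured this way depend on hardware, quantization, and prompt length, so compare models on the same machine with the same prompts.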

Because setup is quick, Ollama is well suited to lightweight deployments, especially for demonstrating proof-of-concept projects.

Developers can show what a model can do to team members, clients, and other stakeholders without complex configuration, and smaller models run without heavy system requirements.

Ollama exposes a local REST API (by default at http://localhost:11434), so developers can call local models from their own applications. This makes it straightforward to build applications that combine model output with databases or existing services, and to move AI features into production workflows.
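As a minimal sketch of that kind of integration, the Python code below turns a record (say, a row fetched from your database) into a prompt and sends it to Ollama’s /api/generate endpoint. The options object carries inference settings such as temperature and num_ctx (context length). The helper names and the record format are hypothetical, and the server is assumed to be at its default address.

```python
import json
import urllib.request

# Assumption: Ollama's API is reachable at its default local address.
OLLAMA_GENERATE_URL = "http://localhost:11434/api/generate"


def record_to_prompt(record: dict) -> str:
    """Turn a hypothetical support-ticket record into a summarization prompt."""
    return (
        "Summarize this support ticket in one sentence:\n"
        + json.dumps(record, ensure_ascii=False, sort_keys=True)
    )


def build_generate_payload(model: str, prompt: str, temperature: float = 0.2,
                           num_ctx: int = 4096) -> dict:
    """Non-streaming /api/generate request with a few inference options."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"temperature": temperature, "num_ctx": num_ctx},
    }


def generate(payload: dict, url: str = OLLAMA_GENERATE_URL, timeout: float = 120) -> str:
    """POST the payload and return the generated text."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)["response"]


# Example (requires a running Ollama server; model and record are illustrative):
# ticket = {"id": 42, "subject": "Login fails", "body": "Reset email never arrives."}
# print(generate(build_generate_payload("llama3.2", record_to_prompt(ticket))))
```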

Developers use Ollama’s CLI and API to build workflows tailored to their projects’ specific needs, whether for batch inference, automated testing, or pipeline integration, which streamlines development and boosts productivity.
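Batch inference can be as simple as mapping a generate function over a list of prompts. The sketch below runs a few requests concurrently with a thread pool and keeps results in input order; generate_fn is a hypothetical stand-in for any function that sends one prompt to a local model (for example, a thin wrapper around Ollama’s /api/generate). How many requests the server processes at once depends on its own configuration, so extra threads may simply wait in a queue.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def batch_generate(
    prompts: Sequence[str],
    generate_fn: Callable[[str], str],
    max_workers: int = 2,
) -> list[str]:
    """Run generate_fn over all prompts concurrently; results keep input order."""
    if max_workers < 1:
        raise ValueError("max_workers must be at least 1")
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the inputs.
        return list(pool.map(generate_fn, prompts))


if __name__ == "__main__":
    # Stand-in for a real model call, so the sketch runs without a server.
    def fake_generate(prompt: str) -> str:
        return f"[summary of: {prompt}]"

    for output in batch_generate(["ticket 1", "ticket 2", "ticket 3"], fake_generate):
        print(output)
```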

Like LM Studio, Ollama has drawbacks alongside its advantages. Let’s look at them in detail:

Ollama was built for macOS and Linux first, and native Windows support arrived later (initially as a preview). Teams on Windows or on cross-platform setups should therefore confirm that their hardware, GPU drivers, and tooling are supported before standardizing on it.

Ollama is driven mainly through its command-line interface and API, unlike GUI-first tools. This creates a steeper learning curve for newcomers, non-technical users, and anyone who prefers visual model management.

Another drawback of Ollama is that it lacks comprehensive enterprise-level features, such as large-scale deployment support, collaboration tools, and built-in security controls (for example, its local API has no built-in authentication). On its own, it is not a good fit for companies with complex infrastructure or extensive compliance requirements.

Now let’s take a detailed look at LM Studio vs Ollama across several factors to understand the actual differences between these two tools:

LM Studio uses a GUI-based installer with step-by-step visual guidance, which suits users who are comfortable with graphical environments. However, setup often takes longer because the download is larger.

Ollama’s installation is lightweight and mainly CLI-oriented (on Linux it takes a single shell command), so setup is quick and hassle-free. Developers can run models within minutes, which suits those who prefer command-line workflows and fast deployment.

From lightweight models to large transformer-based LLMs, LM Studio supports a wide range of open-weight models. This versatility lets developers compare many model families in one place, which suits research projects and enterprise applications.

Ollama also supports a wide variety of LLMs through its model library, typically distributed in quantized form for efficient local deployment and testing.

LM Studio provides fine-grained resource management, letting users balance CPU and GPU usage for high-performance model execution. However, its multi-model support and desktop GUI add system overhead.

Ollama offers lightweight execution and fast inference, staying responsive even on systems with limited resources. It also uses GPU acceleration when a supported GPU is available, though it exposes fewer visual tuning controls than LM Studio.

LM Studio offers visual model management, a user-friendly interface, and easy monitoring of model performance, all of which improve the developer experience. It appeals to both enterprises and beginners.

Ollama is designed primarily for developers, with a CLI-centric experience, straightforward commands, and a scriptable API that make it easy to integrate and automate within existing development pipelines.
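In a pipeline, a typical first step is checking that the Ollama server is up and the required model is installed before any jobs run. The sketch below queries the GET /api/tags endpoint, which lists locally available models; the helper names, the default address, and the model tag are assumptions for illustration.

```python
import json
import sys
import urllib.request


def installed_models(tags_response: dict) -> list[str]:
    """Extract model names from an /api/tags response."""
    return sorted(m["name"] for m in tags_response.get("models", []))


def has_model(tags_response: dict, wanted: str) -> bool:
    """True if the model is installed; a bare name also matches its ':latest' tag."""
    names = installed_models(tags_response)
    return wanted in names or f"{wanted}:latest" in names


def fetch_tags(base_url: str = "http://localhost:11434", timeout: float = 5) -> dict:
    """Query the local Ollama server for installed models."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def check_ready(model: str) -> int:
    """Return a shell-style exit code: 0 if ready, 1 otherwise."""
    try:
        tags = fetch_tags()
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"Ollama server not reachable: {exc}", file=sys.stderr)
        return 1
    if not has_model(tags, model):
        print(f"Model {model!r} not installed; run: ollama pull {model}", file=sys.stderr)
        return 1
    return 0


# Example CI step (the model tag is illustrative):
# sys.exit(check_ready("llama3.2"))
```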

LM Studio supports the major desktop operating systems: macOS (on Apple Silicon), Windows, and Linux. This makes it a practical choice for mixed enterprise environments.

Ollama also runs on macOS, Linux, and Windows, although Windows support arrived later than on the other two platforms.

LM Studio supports customization through per-model settings such as system prompts and inference parameters, and developers can integrate it with other tools through its OpenAI-compatible local server and its SDKs.

Ollama offers developer-friendly customization through its CLI, Modelfiles, and API integration. This allows quick adaptation to existing workflows, even though it lacks GUI-based customization tools.

LM Studio has an active community of users and developers, and its SDKs and CLI are developed in the open on GitHub. This community support makes troubleshooting and development easier.

Ollama is open source (MIT-licensed) and has a large developer community that contributes updates, guidance, and shared workflows, which is valuable for CLI-based local AI deployments.

LM Studio is the best option for GUI-focused teams. It’s good for enterprises looking for multi-OS support, resource-intensive workflows, projects requiring visual model management, and more.

Ollama is a good choice for developers seeking rapid experimentation, a CLI workflow, and lightweight local deployment. It fits especially well into terminal-centric development environments.

Choose LM Studio when you need cross-platform support, ease of use, and visual model management. Let’s look at these in more detail:

You can go with LM Studio if your development team prefers graphical interfaces over command-line tools. It includes an intuitive GUI that enables developers to analyze, manage, and implement models visually, which makes it convenient for teams to collaborate.

LM Studio is the best choice for those who prefer user-friendly operation. Visual management, guided installation, and multi-model support all shorten the learning curve, so developers can focus on AI experimentation and implementation.

LM Studio is well-suited to organizations that use multiple operating systems. Its consistent desktop experience across macOS, Windows, and Linux, together with its resource management controls, helps teams tune AI applications, run large models efficiently, and keep workflows and data on local machines across multiple teams.

Ollama is the right choice for teams that prefer the command line or work with limited system resources. Let’s discuss when you should consider it:

Developers who prefer command-line interfaces can go with Ollama. They can use its CLI setups to get complete control over automation, model management, and integration into custom workflows.

Ollama works well even on modest hardware. Its lightweight architecture keeps resource consumption low and inference fast, which suits developers working on resource-constrained systems.

With easy setup, smooth integration, and rapid execution, Ollama lets developers experiment and assess performance quickly, which supports agile development.

Moon Technolabs helps you choose, integrate, and build AI-powered solutions tailored to your business using the right platform.

By now, you should have a clearer idea of which tool matches your project requirements. Both LM Studio and Ollama are capable tools suited to different workflows: LM Studio for GUI-driven, visual model management and Ollama for CLI- and API-driven automation. Partnering with an AI app development company like Moon Technolabs helps you leverage these tools efficiently.

